Dynamic Multipoint VPN (DMVPN)

Do not follow instructions here until this notice is removed.

http://alpinelinux.org/about under Why the Name Alpine? states: [ref?]

The first open-source implementation of Cisco's DMVPN, called OpenNHRP, was written for Alpine Linux.

So the aim of this document is to be the reference Linux DMVPN setup, with all the networking services needed for the clients that will use the DMVPN (DNS, DHCP, firewall, etc.).

Terminology

NBMA: Non-Broadcast Multi-Access network as described in RFC 2332

Hub: the Next Hop Server (NHS) performing the Next Hop Resolution Protocol service within the NBMA cloud.

Spoke: the Next Hop Resolution Protocol Client (NHC) which initiates NHRP requests of various types in order to obtain access to the NHRP service.

Extract Certificates

We will use certificates for DMVPN and for OpenVPN (RoadWarrior clients). Here are the general-purpose instructions for extracting certificates from pfx files:

openssl pkcs12 -in cert.pfx -cacerts -nokeys -out cacert.pem

openssl pkcs12 -in cert.pfx -nocerts -nodes -out serverkey.pem

openssl pkcs12 -in cert.pfx -nokeys -clcerts -out cert.pem

Remember to set appropriate permission for your certificate files:

chmod 600 *.pem *.pfx
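To confirm the permissions took effect, you can check that every extracted file really has mode 600. The sketch below is only an illustration: the helper name check_private is hypothetical, and it assumes a stat that supports -c %a (GNU coreutils and BusyBox both do).

```shell
#!/bin/sh
# check_private FILE...: succeed only if every listed file has mode 600.
# Hypothetical helper for illustration; not part of any package.
check_private() {
    rc=0
    for f in "$@"; do
        # A file that cannot be stat'ed counts as a failure.
        mode=$(stat -c %a "$f") || { rc=1; continue; }
        if [ "$mode" != "600" ]; then
            echo "$f has mode $mode, expected 600" >&2
            rc=1
        fi
    done
    return $rc
}

# Example, using the file names from the extraction commands:
#   check_private cacert.pem serverkey.pem cert.pem
```

The function prints one line per offending file on stderr and returns non-zero if any file is missing or has a different mode.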

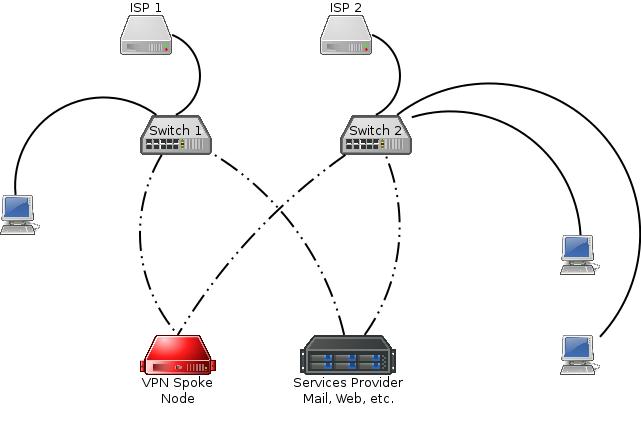

Spoke Node

A local spoke node network has support for multiple ISP connections, along with redundant layer 2 switches. At least one 802.1q capable switch is required, and a second is optional for redundancy purposes. The typical spoke node network looks like:

Alpine Setup

We will set up the network interfaces as follows:

bond0.3 = Management (not implemented below yet)

bond0.8 = LAN

bond0.64 = DMZ

bond0.80 = Voice (not implemented below yet)

bond0.96 = Internet Access Only (no access to the DMVPN network)(not implemented below yet)

bond0.256 = ISP1

bond0.257 = ISP2

Boot Alpine in diskless mode and run setup-alpine

| You will be prompted something like this... | Suggestion on what you could enter... |
|---|---|
| Select keyboard layout [none]: | Type an appropriate layout for you |
| Select variant: | Type an appropriate layout for you (if prompted) |
| Enter system hostname (short form, e.g. 'foo') [localhost]: | Enter the hostname, e.g. vpnc |
| Available interfaces are: eth0 | Enter bond0.8 |
| Available bond slaves are: eth0 eth1 | eth0 eth1 |
| IP address for bond0? (or 'dhcp', 'none', '?') [dhcp]: | Press Enter confirming 'none' |
| IP address for bond0.8? (or 'dhcp', 'none', '?') [dhcp]: | Enter the IP address of your LAN interface, e.g. 10.1.0.1 |
| Netmask? [255.255.255.0]: | Press Enter confirming '255.255.255.0' or type another appropriate subnet mask |
| Gateway? (or 'none') [none]: | Press Enter confirming 'none' |
| Do you want to do any manual network configuration? [no] | yes |
| Make a copy of the bond0.8 configuration for bond0.64, bond0.256 and bond0.257 (optional) interfaces. Don't forget to add a gateway and a metric value for ISP interfaces when multiple gateways are set. Save and close the file (:wq) | |
| DNS domain name? (e.g. 'bar.com') []: | Enter the domain name of your intranet, e.g., example.net |
| DNS nameservers(s)? []: | 8.8.8.8 8.8.4.4 (we will change them later) |
| Changing password for root | Enter a secure password for the console |
| Retype password: | Retype the above password |
| Which timezone are you in? ('?' for list) [UTC]: | Press Enter confirming 'UTC' |
| HTTP/FTP proxy URL? (e.g. 'http://proxy:8080', or 'none') [none] | Press Enter confirming 'none' |
| Enter mirror number (1-9) or URL to add (or r/f/e/done) [f]: | Select a mirror close to you and press Enter |
| Which SSH server? ('openssh', 'dropbear' or 'none') [openssh]: | Press Enter confirming 'openssh' |
| Which NTP client to run? ('openntpd', 'chrony' or 'none') [chrony]: | Press Enter confirming 'chrony' |
| Which disk(s) would you like to use? (or '?' for help or 'none') [none]: | Press Enter confirming 'none' or type 'none' if needed |
| Enter where to store configs ('floppy', 'usb' or 'none') [usb]: | Press Enter confirming 'usb' |
| Enter apk cache directory (or '?' or 'none') [/media/usb/cache]: | Press Enter confirming '/media/usb/cache' |
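The manual network configuration step asks you to copy the bond0.8 stanza for the other VLAN interfaces, adding a gateway and a metric on the ISP-facing ones. As a rough sketch of what those extra stanzas could look like, the helper below prints them for review. It assumes a plain static stanza; vlan_stanza is a hypothetical name, and every address and metric shown is a placeholder rather than a value from this setup.

```shell
#!/bin/sh
# vlan_stanza IFACE ADDRESS NETMASK [GATEWAY [METRIC]]
# Prints an /etc/network/interfaces stanza for one VLAN interface.
# Hypothetical helper; all values passed below are placeholders.
vlan_stanza() {
    iface=$1; address=$2; netmask=$3; gateway=$4; metric=$5
    printf 'auto %s\n' "$iface"
    printf 'iface %s inet static\n' "$iface"
    printf '\taddress %s\n' "$address"
    printf '\tnetmask %s\n' "$netmask"
    # Only ISP-facing interfaces get a gateway and a metric.
    if [ -n "$gateway" ]; then
        printf '\tgateway %s\n' "$gateway"
    fi
    if [ -n "$metric" ]; then
        printf '\tmetric %s\n' "$metric"
    fi
    printf '\n'
}

# DMZ interface: no gateway.
vlan_stanza bond0.64 10.1.64.1 255.255.255.0
# ISP1 interface: gateway plus metric (documentation-range addresses).
vlan_stanza bond0.256 192.0.2.2 255.255.255.0 192.0.2.1 200
```

The output is meant to be read and then pasted into /etc/network/interfaces by hand, so nothing is written to the system by the sketch itself.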

Bonding

Update the bonding configuration:

echo bonding mode=balance-tlb miimon=100 updelay=500 >> /etc/modules

Physically install

At this point, you're ready to connect the VPN Spoke Node to the network if you haven't already done so. Set up an 802.1q capable switch with the VLANs listed in the Alpine Setup section. Once done, tag all of the VLANs on one port and connect that port to eth0. Then connect your first ISP's CPE to a switchport with VLAN 256 untagged.

SSH

Remove password authentication and DNS reverse lookup:

sed -i "s/.PasswordAuthentication yes/PasswordAuthentication no/" /etc/ssh/sshd_config

sed -i "s/.UseDNS yes/UseDNS no/" /etc/ssh/sshd_config
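Note that the leading dot in those expressions requires some character (typically #) directly before the option name, so an uncommented line starting in column one is left untouched. A slightly more tolerant variant is sketched below; it is an illustration rather than the original author's command. The function name harden_sshd is hypothetical, and it takes the file path as an argument so it can be tried on a copy first.

```shell
#!/bin/sh
# harden_sshd FILE: set PasswordAuthentication and UseDNS to "no" in FILE,
# whether or not the existing "yes" line is commented out with '#'.
harden_sshd() {
    sed -i \
        -e 's/^#*PasswordAuthentication yes/PasswordAuthentication no/' \
        -e 's/^#*UseDNS yes/UseDNS no/' \
        "$1"
}

# Try it on a scratch copy before touching the live file:
#   cp /etc/ssh/sshd_config /tmp/sshd_config.test
#   harden_sshd /tmp/sshd_config.test
```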

Recursive DNS

apk add -U unbound

With your favorite editor open /etc/unbound/unbound.conf and add the following configuration. The stub-zone stanzas cover domains that are internal to your network but that you still want unbound to resolve:

server:

verbosity: 1

interface: 10.1.0.1

do-ip4: yes

do-ip6: no

do-udp: yes

do-tcp: yes

do-daemonize: yes

access-control: 10.1.0.0/16 allow

access-control: 127.0.0.0/8 allow

do-not-query-localhost: no

root-hints: "/etc/unbound/root.hints"

stub-zone:

name: "location1.example.net"

stub-addr: 10.1.0.2

stub-zone:

name: "example.net"

stub-addr: 172.16.255.1

stub-addr: 172.16.255.2

stub-addr: 172.16.255.3

stub-addr: 172.16.255.4

stub-addr: 172.16.255.5

stub-addr: 172.16.255.7

stub-zone:

name: "example2.net"

stub-addr: 172.16.255.1

stub-addr: 172.16.255.2

stub-addr: 172.16.255.3

stub-addr: 172.16.255.4

stub-addr: 172.16.255.5

stub-addr: 172.16.255.7

python:

remote-control:

control-enable: no

Start unbound:

/etc/init.d/unbound start

rc-update add unbound

echo nameserver 10.1.0.1 > /etc/resolv.conf

Local DNS Zone

If you have a DNS zone that is only resolvable internally to your network, you will need a second IP address on your LAN interface and NSD to host the zone.

First, add the following to the end of the bond0.8 stanza in /etc/network/interfaces:

auto bond0.8

...

...

up ip addr add 10.1.0.2/24 dev bond0.8

Then, install nsd:

apk add nsd

Create /etc/nsd/nsd.conf:

server:

ip-address: 10.1.0.2

port: 53

server-count: 1

ip4-only: yes

hide-version: yes

identity: ""

zonesdir: "/etc/nsd"

zone:

name: location1.example.net

zonefile: location1.example.net.zone

Create zonefile in /etc/nsd/location1.example.net.zone:

;## location1.example.net authoritative zone

$ORIGIN location1.example.net.

$TTL 86400

@ IN SOA ns1.location1.example.net. webmaster.location1.example.net. (

2013081901 ; serial

28800 ; refresh

7200 ; retry

86400 ; expire

86400 ; min TTL

)

@ IN NS ns1.location1.example.net.

@ IN MX 10 mail.location1.example.net.

ns1 IN A 10.1.0.2

mail IN A 10.1.0.4

Check configuration then start:

nsd-checkconf /etc/nsd/nsd.conf

nsdc rebuild

/etc/init.d/nsd start

rc-update add nsd

GRE Tunnel

With your favorite editor open /etc/network/interfaces and add the following:

auto gre1

iface gre1 inet static

pre-up ip tunnel add $IFACE mode gre ttl 64 tos inherit key 12.34.56.78 || true

address 172.16.1.1

netmask 255.255.0.0

post-down ip tunnel del $IFACE || true

Save and close the file.

ifup gre1

IPSEC

apk add ipsec-tools

With your favorite editor create /etc/ipsec.conf and set the content to the following:

spdflush;

spdadd 0.0.0.0/0 0.0.0.0/0 gre -P out ipsec esp/transport//require;

spdadd 0.0.0.0/0 0.0.0.0/0 gre -P in ipsec esp/transport//require;

mkdir /etc/racoon/

Extract your pfx into /etc/racoon, using the filenames ca.pem, cert.pem, and key.pem.

With your favorite editor create /etc/racoon/racoon.conf and set the content to the following:

path certificate "/etc/racoon/";

remote anonymous {

exchange_mode main;

lifetime time 2 hour;

certificate_type x509 "/etc/racoon/cert.pem" "/etc/racoon/key.pem";

ca_type x509 "/etc/racoon/ca.pem";

my_identifier asn1dn;

nat_traversal on;

script "/etc/opennhrp/racoon-ph1dead.sh" phase1_dead;

dpd_delay 120;

proposal {

encryption_algorithm aes 256;

hash_algorithm sha1;

authentication_method rsasig;

dh_group modp4096;

}

proposal {

encryption_algorithm aes 256;

hash_algorithm sha1;

authentication_method rsasig;

dh_group 2;

}

}

sainfo anonymous {

pfs_group 2;

lifetime time 2 hour;

encryption_algorithm aes 256;

authentication_algorithm hmac_sha1;

compression_algorithm deflate;

}

Edit /etc/conf.d/racoon and unset RACOON_PSK_FILE:

... RACOON_PSK_FILE= ...

Save and close the file.

/etc/init.d/racoon start

rc-update add racoon

Next Hop Resolution Protocol (NHRP)

apk add opennhrp

With your favorite editor open /etc/opennhrp/opennhrp.conf and change the content to the following:

interface gre1

dynamic-map 172.16.0.0/16 hub.example.com

shortcut

redirect

non-caching

interface bond0.8

shortcut-destination

interface bond0.64

shortcut-destination

With your favorite editor open /etc/opennhrp/opennhrp-script and change the content to the following:

#!/bin/sh

MYAS=$(sed -n 's/router bgp \([0-9]*\)/\1/p' < /etc/quagga/bgpd.conf)

case $1 in

interface-up)

echo "Interface $NHRP_INTERFACE is up"

if [ "$NHRP_INTERFACE" = "gre1" ]; then

ip route flush proto 42 dev $NHRP_INTERFACE

ip neigh flush dev $NHRP_INTERFACE

vtysh -d bgpd \

-c "configure terminal" \

-c "router bgp $MYAS" \

-c "no neighbor core" \

-c "neighbor core peer-group"

fi

;;

peer-register)

;;

peer-up)

if [ -n "$NHRP_DESTMTU" ]; then

ARGS=`ip route get $NHRP_DESTNBMA from $NHRP_SRCNBMA | head -1`

ip route add $ARGS proto 42 mtu $NHRP_DESTMTU

fi

echo "Create link from $NHRP_SRCADDR ($NHRP_SRCNBMA) to $NHRP_DESTADDR ($NHRP_DESTNBMA)"

racoonctl establish-sa -w isakmp inet $NHRP_SRCNBMA $NHRP_DESTNBMA || exit 1

racoonctl establish-sa -w esp inet $NHRP_SRCNBMA $NHRP_DESTNBMA gre || exit 1

;;

peer-down)

echo "Delete link from $NHRP_SRCADDR ($NHRP_SRCNBMA) to $NHRP_DESTADDR ($NHRP_DESTNBMA)"

racoonctl delete-sa isakmp inet $NHRP_SRCNBMA $NHRP_DESTNBMA

ip route del $NHRP_DESTNBMA src $NHRP_SRCNBMA proto 42

;;

nhs-up)

echo "NHS UP $NHRP_DESTADDR"

(

flock -x 200

vtysh -d bgpd \

-c "configure terminal" \

-c "router bgp $MYAS" \

-c "neighbor $NHRP_DESTADDR remote-as 65000" \

-c "neighbor $NHRP_DESTADDR peer-group core" \

-c "exit" \

-c "exit" \

-c "clear bgp $NHRP_DESTADDR"

) 200>/var/lock/opennhrp-script.lock

;;

nhs-down)

(

flock -x 200

vtysh -d bgpd \

-c "configure terminal" \

-c "router bgp $MYAS" \

-c "no neighbor $NHRP_DESTADDR"

) 200>/var/lock/opennhrp-script.lock

;;

route-up)

echo "Route $NHRP_DESTADDR/$NHRP_DESTPREFIX is up"

ip route replace $NHRP_DESTADDR/$NHRP_DESTPREFIX proto 42 via $NHRP_NEXTHOP dev $NHRP_INTERFACE

ip route flush cache

;;

route-down)

echo "Route $NHRP_DESTADDR/$NHRP_DESTPREFIX is down"

ip route del $NHRP_DESTADDR/$NHRP_DESTPREFIX proto 42

ip route flush cache

;;

esac

exit 0

Save and close the file. Make it executable:

chmod +x /etc/opennhrp/opennhrp-script

/etc/init.d/opennhrp start

rc-update add opennhrp
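The script derives the local AS number (MYAS) from the router bgp line of /etc/quagga/bgpd.conf. That extraction step can be tried in isolation with the sketch below. The function name local_as is hypothetical; it uses the portable [0-9] character class and takes the file path as an argument so it can be run against a test file.

```shell
#!/bin/sh
# local_as FILE: print the AS number from the first "router bgp N" line.
# Hypothetical helper that mirrors how the script computes MYAS.
local_as() {
    sed -n 's/^[[:space:]]*router bgp \([0-9][0-9]*\).*/\1/p' "$1" | head -n 1
}

# Example against the real configuration:
#   local_as /etc/quagga/bgpd.conf
```

If the function prints nothing, the vtysh calls in the script would run with an empty AS number, so it is worth checking this once bgpd.conf exists.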

BGP

apk add quagga

touch /etc/quagga/zebra.conf

With your favorite editor open /etc/quagga/bgpd.conf and change the content to the following:

password strongpassword

enable password strongpassword

log syslog

access-list 1 remark Command line access authorized IP

access-list 1 permit 127.0.0.1

line vty

access-class 1

hostname vpnc.example.net

router bgp 65001

bgp router-id 172.16.1.1

network 10.1.0.0/16

neighbor %HUB_GRE_IP% remote-as 65000

neighbor %HUB_GRE_IP% remote-as 65000

...

Add the line neighbor %HUB_GRE_IP% remote-as 65000 for each Hub host you have in your NBMA cloud.

Save and close the file.

/etc/init.d/bgpd start

rc-update add bgpd

OpenVPN

echo tun >> /etc/modules

modprobe tun

apk add openvpn openssl

openssl dhparam -out /etc/openvpn/dh1024.pem 1024

Set up the config in /etc/openvpn/openvpn.conf

dev tun

proto udp

port 1194

server 10.1.128.0 255.255.255.0

push "route 10.0.0.0 255.0.0.0"

push "dhcp-option DNS 10.1.0.1"

tls-server

ca /etc/openvpn/cacert.pem

cert /etc/openvpn/servercert.pem

key /etc/openvpn/serverkey.pem

crl-verify /etc/openvpn/crl.pem

dh /etc/openvpn/dh1024.pem

persist-key

persist-tun

keepalive 10 120

comp-lzo

status /var/log/openvpn.status

mute 20

verb 3

/etc/init.d/openvpn start

rc-update add openvpn

Firewall

apk add awall

With your favorite editor, edit the following files and set their contents as follows:

/etc/awall/optional/params.json

{

"description": "params",

"variable": {

"B_IF": "bond0.8",

"C_IF": "bond0.64",

"ISP1_IF": "bond0.256",

"ISP2_IF": "bond0.257"

}

}

/etc/awall/optional/internet-host.json

{

"description": "Internet host",

"import": "params",

"zone": {

"E": { "iface": [ "$ISP1_IF", "$ISP2_IF" ] },

"ISP1": { "iface": "$ISP1_IF" },

"ISP2": { "iface": "$ISP2_IF" }

},

"filter": [

{

"in": "E",

"service": "ping",

"action": "accept",

"flow-limit": { "count": 10, "interval": 6 }

},

{

"in": "E",

"out": "_fw",

"service": [ "ssh", "https" ],

"action": "accept",

"conn-limit": { "count": 3, "interval": 60 }

},

{

"in": "_fw",

"out": "E",

"service": [ "dns", "http", "ntp" ],

"action": "accept"

},

{

"in": "_fw",

"service": [ "ping", "ssh" ],

"action": "accept"

}

]

}

/etc/awall/optional/openvpn.json

{

"description": "OpenVPN support",

"import": "internet-host",

"service": {

"openvpn": { "proto": "udp", "port": 1194 }

},

"filter": [

{ "in": "E", "out": "_fw", "service": "openvpn", "action": "accept" }

]

}

/etc/awall/optional/clampmss.json

{

"description": "Deal with ISPs afraid of ICMP",

"import": "internet-host",

"clamp-mss": [ { "out": "E" } ]

}

/etc/awall/optional/mark.json

{

"description": "Mark traffic based on ISP",

"import": [ "params", "internet-host" ],

"route-track": [

{ "out": "ISP1", "mark": 1 },

{ "out": "ISP2", "mark": 2 }

]

}

/etc/awall/optional/dmvpn.json

{

"description": "DMVPN router",

"import": "internet-host",

"variable": {

"A_ADDR": [ "10.0.0.0/8", "172.16.0.0/16" ]

},

"zone": {

"A": { "addr": "$A_ADDR", "iface": "gre1" }

},

"filter": [

{ "in": "E", "out": "_fw", "service": "ipsec", "action": "accept" },

{ "in": "_fw", "out": "E", "service": "ipsec", "action": "accept" },

{

"in": "E",

"out": "_fw",

"ipsec": "in",

"service": "gre",

"action": "accept"

},

{

"in": "_fw",

"out": "E",

"ipsec": "out",

"service": "gre",

"action": "accept"

},

{ "in": "_fw", "out": "A", "service": "bgp", "action": "accept" },

{ "in": "A", "out": "_fw", "service": "bgp", "action": "accept"},

{ "out": "E", "dest": "$A_ADDR", "action": "reject" }

]

}

/etc/awall/optional/vpnc.json

{

"description": "VPNc",

"import": [ "params", "internet-host", "dmvpn" ],

"zone": {

"B": { "iface": "$B_IF" },

"C": { "iface": "$C_IF" }

},

"policy": [

{ "in": "A", "action": "accept" },

{ "in": "B", "out": "A", "action": "accept" },

{ "in": "C", "out": [ "A", "E" ], "action": "accept" },

{ "in": "E", "action": "drop" },

{ "in": "_fw", "out": "A", "action": "accept" }

],

"snat": [

{ "out": "E" }

],

"filter": [

{

"in": "A",

"out": "_fw",

"service": [ "ping", "ssh", "http", "https" ],

"action": "accept"

},

{

"in": [ "B", "C" ],

"out": "_fw",

"service": [ "dns", "ntp", "http", "https", "ssh" ],

"action": "accept"

},

{

"in": "_fw",

"out": [ "B", "C" ],

"service": [ "dns", "ntp" ],

"action": "accept"

},

{

"in": [ "A", "B", "C" ],

"out": "_fw",

"proto": "icmp",

"action": "accept"

}

]

}

modprobe ip_tables

modprobe iptable_nat

awall enable clampmss

awall enable openvpn

awall enable vpnc

awall activate

rc-update add iptables

ISP Failover

apk add pingu

echo -e "1\tisp1">> /etc/iproute2/rt_tables

echo -e "2\tisp2">> /etc/iproute2/rt_tables
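Because >> appends unconditionally, running the echo commands a second time leaves duplicate entries in rt_tables. A minimal sketch of an idempotent alternative follows. The helper name add_rt_table is hypothetical, and it takes the file path as its first argument so it can be exercised on a scratch file before being pointed at the real one.

```shell
#!/bin/sh
# add_rt_table FILE ID NAME: append "ID<TAB>NAME" to FILE unless a table
# called NAME is already listed there under any numeric ID.
add_rt_table() {
    file=$1; id=$2; name=$3
    if grep -Eq "^[[:space:]]*[0-9]+[[:space:]]+${name}[[:space:]]*\$" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s\t%s\n' "$id" "$name" >> "$file"
}

# Usage on the real file:
#   add_rt_table /etc/iproute2/rt_tables 1 isp1
#   add_rt_table /etc/iproute2/rt_tables 2 isp2
```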

Configure pingu to monitor the bond0.256 and bond0.257 interfaces in /etc/pingu/pingu.conf. Add the hosts to monitor for ISP failover and bind them to the primary ISP. The ping timeout is also set to 4 seconds:

timeout 4

required 2

retry 11

interface bond0.256 {

# route-table must correspond with mark in /etc/awall/optional/mark.json

route-table 1

fwmark 1

rule-priority 20000

# google dns

ping 8.8.8.8

# opendns

ping 208.67.222.222

}

interface bond0.257 {

# route-table must correspond with mark in /etc/awall/optional/mark.json

route-table 2

fwmark 2

rule-priority 20000

}

Make sure we can reach the public IP from our LAN by adding static route rules for our private net(s). Edit /etc/pingu/route-rules:

to 10.0.0.0/8 table main prio 1000

to 172.16.0.0/12 table main prio 1000

Start pingu:

/etc/init.d/pingu start

rc-update add pingu

Now, if both hosts stop responding to pings, ISP-1 will be considered down and all gateways via bond0.256 will be removed from the main route table. Note that the gateway will not be removed from route table '1'. This lets pingu keep trying to ping via bond0.256 and detect when the ISP is back online. When the ISP starts working again, the gateways will be added back to the main route table.

Commit Configuration

lbu ci

Hub Node

We will document only what changes from the Spoke node setup.

Routing Tables

echo -e "42\tnhrp_shortcut\n43\tnhrp_mtu\n44\tquagga\n

NHRP

With your favorite editor open /etc/opennhrp/opennhrp.conf on Hub 2 and set the content as follows:

interface gre1

map %Hub1_GRE_IP%/%MaskBit% hub1.example.org

route-table 44

shortcut

redirect

non-caching

Do the same on Hub 1 adding the data relative to Hub 2.

With your favorite editor open /etc/opennhrp/opennhrp-script and set the content as follows:

#!/bin/sh

case $1 in

interface-up)

ip route flush proto 42 dev $NHRP_INTERFACE

ip neigh flush dev $NHRP_INTERFACE

;;

peer-register)

CERT=`racoonctl get-cert inet $NHRP_SRCNBMA $NHRP_DESTNBMA | openssl x509 -inform der -text -noout | egrep -o "/OU=[^/]*(/[0-9]+)?" | cut -b 5-`

if [ -z "`echo "$CERT" | grep "^GRE=$NHRP_DESTADDR"`" ]; then

logger -t opennhrp-script -p auth.err "GRE registration of $NHRP_DESTADDR to $NHRP_DESTNBMA DENIED"

exit 1

fi

logger -t opennhrp-script -p auth.info "GRE registration of $NHRP_DESTADDR to $NHRP_DESTNBMA authenticated"

(

flock -x 200

AS=`echo "$CERT" | grep "^AS=" | cut -b 4-`

vtysh -d bgpd -c "configure terminal" \

-c "router bgp 65000" \

-c "neighbor $NHRP_DESTADDR remote-as $AS" \

-c "neighbor $NHRP_DESTADDR peer-group leaf" \

-c "neighbor $NHRP_DESTADDR prefix-list net-$AS-in in"

SEQ=5

(echo "$CERT" | grep "^NET=" | cut -b 5-) | while read NET; do

vtysh -d bgpd -c "configure terminal" \

-c "ip prefix-list net-$AS-in seq $SEQ permit $NET le 26"

SEQ=$(($SEQ+5))

done

) 200>/var/lock/opennhrp-script.lock

;;

peer-up)

echo "Create link from $NHRP_SRCADDR ($NHRP_SRCNBMA) to $NHRP_DESTADDR ($NHRP_DESTNBMA)"

racoonctl establish-sa -w isakmp inet $NHRP_SRCNBMA $NHRP_DESTNBMA || exit 1

racoonctl establish-sa -w esp inet $NHRP_SRCNBMA $NHRP_DESTNBMA gre || exit 1

CERT=`racoonctl get-cert inet $NHRP_SRCNBMA $NHRP_DESTNBMA | openssl x509 -inform der -text -noout | egrep -o "/OU=[^/]*(/[0-9]+)?" | cut -b 5-`

if [ -z "`echo "$CERT" | grep "^GRE=$NHRP_DESTADDR"`" ]; then

logger -p daemon.err "GRE mapping of $NHRP_DESTADDR to $NHRP_DESTNBMA DENIED"

exit 1

fi

if [ -n "$NHRP_DESTMTU" ]; then

ARGS=`ip route get $NHRP_DESTNBMA from $NHRP_SRCNBMA | head -1`

ip route add $ARGS proto 42 mtu $NHRP_DESTMTU table nhrp_mtu

fi

;;

peer-down)

echo "Delete link from $NHRP_SRCADDR ($NHRP_SRCNBMA) to $NHRP_DESTADDR ($NHRP_DESTNBMA)"

if [ "$NHRP_PEER_DOWN_REASON" != "lower-down" ]; then

racoonctl delete-sa isakmp inet $NHRP_SRCNBMA $NHRP_DESTNBMA

fi

ip route del $NHRP_DESTNBMA src $NHRP_SRCNBMA proto 42 table nhrp_mtu

;;

route-up)

echo "Route $NHRP_DESTADDR/$NHRP_DESTPREFIX is up"

ip route replace $NHRP_DESTADDR/$NHRP_DESTPREFIX proto 42 via $NHRP_NEXTHOP dev $NHRP_INTERFACE table nhrp_shortcut

ip route flush cache

;;

route-down)

echo "Route $NHRP_DESTADDR/$NHRP_DESTPREFIX is down"

ip route del $NHRP_DESTADDR/$NHRP_DESTPREFIX proto 42 table nhrp_shortcut

ip route flush cache

;;

esac

exit 0
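The hub script authorizes a spoke by reading KEY=VALUE pairs (GRE, AS and NET) out of the OU components of the peer certificate's subject. The sketch below runs the same grep and cut pipeline on a sample string so the parsing can be checked without a live tunnel. The function name ou_fields and the sample subject are hypothetical, and the sample assumes the slash-separated subject format that the script's regular expression expects.

```shell
#!/bin/sh
# ou_fields SUBJECT: print one KEY=VALUE line per OU component of a
# slash-separated certificate subject, using the hub script's pattern.
ou_fields() {
    printf '%s\n' "$1" | grep -E -o '/OU=[^/]*(/[0-9]+)?' | cut -b 5-
}

# Hypothetical subject for one spoke; real values come from your CA.
SUBJECT='/C=US/O=Example/OU=GRE=172.16.1.1/OU=AS=65001/OU=NET=10.1.0.0/16/CN=spoke1'
CERT=$(ou_fields "$SUBJECT")

echo "$CERT" | grep '^GRE=' | cut -b 5-   # tunnel address the spoke may register
echo "$CERT" | grep '^AS='  | cut -b 4-   # BGP AS number of the spoke
echo "$CERT" | grep '^NET=' | cut -b 5-   # prefix the spoke may announce
```

The optional (/[0-9]+) group in the pattern is what keeps the prefix length attached to a NET value, since the slash would otherwise end the OU component.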

BGP

With your favorite editor open /etc/quagga/bgpd.conf on Hub 2 and set the content as follows:

password zebra

enable password zebra

log syslog

router bgp 65000

bgp router-id %Hub2_GRE_IP%

bgp deterministic-med

network %GRE_NETWORK%/%MASK_BITS%

neighbor hub peer-group

neighbor hub next-hop-self

neighbor hub route-map CORE-IN in

neighbor spoke peer-group

neighbor spoke passive

neighbor spoke next-hop-self

neighbor %Spoke1_GRE_IP% remote-as 65001

neighbor %Spoke1_GRE_IP% peer-group spoke

neighbor %Spoke1_GRE_IP% prefix-list net-65001-in in

...

...

...

neighbor hub remote-as 65000

neighbor %Hub1_GRE_IP% peer-group core

ip prefix-list net-65001-in seq 5 permit 10.1.0.0/16 le 26

...

route-map CORE-IN permit 10

set metric +100

Add the lines neighbor %Spoke1_GRE_IP%... for each spoke node you have. Do the same on Hub 1, changing the relevant data for Hub 2.

Troubleshooting the DMVPN

Broken Path MTU Discovery (PMTUD)

ISPs wary of ICMP (a concern that is somewhat legitimate) often blindly add no ip unreachables to their router interfaces. This effectively creates a blackhole router that breaks PMTUD, because ICMP Type 3 Code 4 packets (Fragmentation Needed) never reach the sender. ISAKMP needs PMTUD because it runs over UDP; TCP keeps working because its MSS is clamped (CLAMPMSS).

For technical details see http://packetlife.net/blog/2008/oct/9/disabling-unreachables-breaks-pmtud/

PMTUD could also be broken due to badly configured DSL modem/routers or bugged firmware.

You can detect which host is the blackhole router by pinging each hop listed in your traceroute to the destination, with the DF bit set and packets of standard MTU size:

ping -M do -s 1472 %IP%

This requires the ping from the iputils package. If you don't get a response back (either Echo Reply or Fragmentation Needed), a firewall is dropping ICMP packets. If the host answers normal ping packets (DF bit cleared), you have most likely hit a blackhole router.
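The payload size of 1472 used with ping is the 1500-byte Ethernet MTU minus the 20-byte IPv4 header and the 8-byte ICMP header. For a link with a different MTU, the size can be derived the same way. The helper icmp_payload below is a hypothetical name, and the calculation assumes IPv4 without IP options.

```shell
#!/bin/sh
# icmp_payload MTU: largest ICMP echo payload that fits in a single IPv4
# packet of the given MTU (20-byte IPv4 header without options, plus an
# 8-byte ICMP header).
icmp_payload() {
    echo $(( $1 - 20 - 8 ))
}

icmp_payload 1500   # standard Ethernet: prints 1472
icmp_payload 1492   # common PPPoE MTU: prints 1464
```

The result can be passed straight to ping, for example ping -M do -s "$(icmp_payload 1492)" %IP%.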