LXC: Difference between revisions

ScrumpyJack (talk | contribs) No edit summary |

Revision as of 17:43, 18 June 2014

Linux Containers (LXC) provides containers similar to BSD Jails, Linux VServer and Solaris Zones. It gives the impression of virtualization, but the containers share the kernel and resources with the "host".

Installation

Install the required packages:

apk add lxc lxc-templates bridge

Prepare network on host

Set up a bridge on the host. Example /etc/network/interfaces:

auto br0

iface br0 inet dhcp

bridge-ports eth0

Create a network configuration template for the guests, /etc/lxc/lxc.conf:

lxc.network.type = veth
lxc.network.link = br0
lxc.network.flags = up
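If you use a bridge with a different name, the template can be generated instead of typed by hand. The following is a minimal sketch; the function name write_lxc_net_conf is made up for illustration and is not part of LXC.

```shell
# Write a minimal veth/bridge network template for LXC guests.
# Usage: write_lxc_net_conf <output-file> [bridge-name]
# The bridge name defaults to br0, as in the example above.
write_lxc_net_conf() {
    conf_file=$1
    bridge=${2:-br0}
    cat > "$conf_file" <<EOF
lxc.network.type = veth
lxc.network.link = $bridge
lxc.network.flags = up
EOF
}
```

For the setup described here you would call it as write_lxc_net_conf /etc/lxc/lxc.conf.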

Create a guest

Alpine Template

lxc-create -n guest1 -f /etc/lxc/lxc.conf -t alpine

This will create a /var/lib/lxc/guest1 directory with a config file and a rootfs directory.

Note that by default the Alpine template does not have the networking service enabled; you will need to add it from inside the guest, using lxc-console.

If running on x86_64 architecture, it is possible to create a 32bit guest:

lxc-create -n guest1 -f /etc/lxc/lxc.conf -t alpine -- --arch x86

Debian template

To create a container from the Debian template you will need to install some packages:

apk add debootstrap rsync

You will also need to turn off some grsecurity chroot options, otherwise debootstrap will fail:

echo 0 > /proc/sys/kernel/grsecurity/chroot_caps
echo 0 > /proc/sys/kernel/grsecurity/chroot_deny_chroot
echo 0 > /proc/sys/kernel/grsecurity/chroot_deny_mount
echo 0 > /proc/sys/kernel/grsecurity/chroot_deny_mknod
echo 0 > /proc/sys/kernel/grsecurity/chroot_deny_chmod

Please remember to turn them back on, or just simply reboot the system.
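To make it harder to forget the second half, the same five options can be switched by one helper. This is only a sketch: set_grsec_chroot and the GRSEC_DIR variable are names invented here, and it assumes all five options were set to 1 before you started, which you should check on your own system.

```shell
# Directory holding the grsecurity sysctl knobs. It can be overridden,
# for example to try the function on a scratch directory first.
GRSEC_DIR=${GRSEC_DIR:-/proc/sys/kernel/grsecurity}

# Set the five chroot options listed above to the given value (0 or 1).
set_grsec_chroot() {
    value=$1
    for knob in chroot_caps chroot_deny_chroot chroot_deny_mount \
                chroot_deny_mknod chroot_deny_chmod; do
        # Skip knobs that this kernel does not expose.
        [ -e "$GRSEC_DIR/$knob" ] || continue
        echo "$value" > "$GRSEC_DIR/$knob"
    done
}
```

Run set_grsec_chroot 0 before lxc-create, and set_grsec_chroot 1 once the container has been built.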

Now you can run:

SUITE=wheezy lxc-create -n guest1 -f /etc/lxc/lxc.conf -t debian

Starting/Stopping the guest

Create a symlink to the /etc/init.d/lxc script for your guest.

ln -s lxc /etc/init.d/lxc.guest1

You can start your guest with:

/etc/init.d/lxc.guest1 start

Stop it with:

/etc/init.d/lxc.guest1 stop

Make it autostart on boot up with:

rc-update add lxc.guest1
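If you manage several guests, the symlink step can be wrapped in a small function so that running it twice does no harm. This is a sketch only; link_guest_service and the INITD variable are hypothetical names, not something shipped with the lxc package.

```shell
# Directory containing the lxc init script; overridable for testing.
INITD=${INITD:-/etc/init.d}

# Create the per-guest symlink lxc.<name> -> lxc unless it already exists.
# Usage: link_guest_service <guest-name>
link_guest_service() {
    name=$1
    [ -L "$INITD/lxc.$name" ] || ln -s lxc "$INITD/lxc.$name"
}
```

After link_guest_service guest1 you would still run rc-update add lxc.guest1 yourself to start the guest on boot.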

Connecting to the guest

By default sshd is not installed, so you will have to connect to a virtual console. This is done with:

lxc-console -n guest1

To disconnect from it, press Ctrl+a q

Deleting a guest

Make sure the guest is stopped and run:

lxc-destroy -n guest1

This will erase everything, without asking any questions. It is equivalent to:

rm -r /var/lib/lxc/guest1

Advanced

Creating a LXC container without modifying your network interfaces

The problem with bridging is that the interface you bridge gets replaced by the new bridge interface. That is, if you have an interface eth0 that you want to bridge, your eth0 interface gets replaced by the br0 interface that you create. It also means that the interface you use needs to be placed into promiscuous mode to catch all the traffic that could be destined for the other side of the bridge, which again may not be what you want.

The solution is to create a dummy network interface, bridge that, and set up NAT so that traffic out of your bridge interface gets pushed through the interface of your choice.

So, first, let's create that dummy interface (thanks to ncopa for talking me out of macvlan)

modprobe dummy

This will create a dummy interface called dummy0

Now we will create a bridge called br0

brctl addbr br0
brctl setfd br0 0

and then make that dummy interface one end

brctl addif br0 dummy0

Next, let's give that bridged interface a reason to exist

ifconfig br0 192.168.1.1 netmask 255.255.255.0 up

Create a file for your container, let's say /etc/lxc/bridgenat.conf, with the following settings.

lxc.network.type = veth
lxc.network.flags = up
lxc.network.link = br0
lxc.network.name = eth1
lxc.network.ipv4 = 192.168.1.2/24
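Note that the bridge was given the netmask 255.255.255.0 while the container config uses the /24 suffix; both describe the same subnet. If you pick a different netmask, the matching suffix can be worked out with a helper like the one below. It is a sketch: netmask_to_prefix is a name made up here, and it assumes a valid, contiguous netmask without checking for one.

```shell
# Convert a dotted netmask (e.g. 255.255.255.0) to a prefix length (24)
# by counting the bits that are set in each octet.
netmask_to_prefix() {
    prefix=0
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the netmask into its four octets
    IFS=$old_ifs
    for octet in "$@"; do
        while [ "$octet" -gt 0 ]; do
            prefix=$((prefix + (octet & 1)))
            octet=$((octet >> 1))
        done
    done
    echo "$prefix"
}
```

For the example above, netmask_to_prefix 255.255.255.0 gives the 24 used in lxc.network.ipv4.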

and build your container with that file

lxc-create -n alpine -f /etc/lxc/bridgenat.conf -t alpine

You should now be able to ping your container from your host, and your host from your container.

Your container needs to know where to send traffic that isn't within its subnet. To do so, we tell the container to route through the bridge interface br0. From inside the container, run:

route add default gw 192.168.1.1

The next step is to push the traffic coming from your private subnet over br0 out through your internet-facing interface, or any other interface you choose.

We are changing your iptables rules here, so make sure these settings don't conflict with anything you may have already set up.

Say eth0 is your internet-facing network interface, and br0 is the name of the bridge you made earlier; we'd do this:

echo 1 > /proc/sys/net/ipv4/ip_forward
iptables --table nat --append POSTROUTING --out-interface eth0 -j MASQUERADE
iptables --append FORWARD --in-interface br0 -j ACCEPT
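Since these commands change your firewall, it can help to see exactly what will be run for your interface names first. The sketch below wraps the same three steps in a function with a dry-run switch. The names run, enable_bridge_nat and DRY_RUN are invented for this example, and sysctl -w net.ipv4.ip_forward=1 is used in place of the echo redirect so that the step can be printed like the others.

```shell
# Run a command, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Enable forwarding and masquerade traffic from the bridge.
# Usage: enable_bridge_nat [internet-facing-if] [bridge-if]
enable_bridge_nat() {
    wan_if=${1:-eth0}     # internet-facing interface
    bridge_if=${2:-br0}   # bridge created earlier
    run sysctl -w net.ipv4.ip_forward=1
    run iptables --table nat --append POSTROUTING --out-interface "$wan_if" -j MASQUERADE
    run iptables --append FORWARD --in-interface "$bridge_if" -j ACCEPT
}
```

Set DRY_RUN=1 and call enable_bridge_nat eth0 br0 to review the commands, then unset DRY_RUN and call it again as root to apply them.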

Now you should be able to route through your bridge interface to the internet facing interface of your host from your container, just like at home!

Using static IP

If you're using a static IP, you need to configure it in the guest's /etc/network/interfaces. To continue with the guest1 example above, modify /var/lib/lxc/guest1/rootfs/etc/network/interfaces

from

#auto lo

iface lo inet loopback

auto eth0

iface eth0 inet dhcp

to

#auto lo

iface lo inet loopback

auto eth0

iface eth0 inet static

address <lxc-container-ip> # IP which the lxc container should use

gateway <gateway-ip> # IP of gateway to use, mostly same as on lxc-host

netmask <netmask>
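The static variant of the file can also be generated from the three values you need to fill in. This is a minimal sketch; guest_static_interfaces is a hypothetical helper name, and the addresses you pass in are your own.

```shell
# Print a static-IP interfaces file for a guest on stdout.
# Usage: guest_static_interfaces <address> <netmask> <gateway>
guest_static_interfaces() {
    cat <<EOF
iface lo inet loopback

auto eth0
iface eth0 inet static
    address $1
    netmask $2
    gateway $3
EOF
}
```

Redirect the output to /var/lib/lxc/guest1/rootfs/etc/network/interfaces while the guest is stopped.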

mem and swap

vim /boot/extlinux.conf

APPEND initrd=initramfs-3.10.13-1-grsec root=UUID=7cd8789f-5659-40f8-9548-ae8f89c918ab modules=sd-mod,usb-storage,ext4 quiet cgroup_enable=memory swapaccount=1
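The APPEND line above adds cgroup_enable=memory and swapaccount=1 to the kernel command line. After rebooting, one way to confirm that both flags were picked up is to look for them in /proc/cmdline. The function below is a sketch with an invented name, has_memcg_flags; it only checks the command line text, not whether the kernel actually honours the flags.

```shell
# Succeed if the given kernel command line contains both
# cgroup_enable=memory and swapaccount=1 as separate words.
# Usage: has_memcg_flags "$(cat /proc/cmdline)"
has_memcg_flags() {
    case " $1 " in
        *" cgroup_enable=memory "*) ;;
        *) return 1 ;;
    esac
    case " $1 " in
        *" swapaccount=1 "*) return 0 ;;
        *) return 1 ;;
    esac
}
```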

checkconfig

lxc-checkconfig

Kernel configuration not found at /proc/config.gz; searching...
Kernel configuration found at /boot/config-3.10.13-1-grsec
--- Namespaces ---
Namespaces: enabled
Utsname namespace: enabled
Ipc namespace: enabled
Pid namespace: enabled
User namespace: missing
Network namespace: enabled
Multiple /dev/pts instances: enabled
--- Control groups ---
Cgroup: enabled
Cgroup clone_children flag: enabled
Cgroup device: enabled
Cgroup sched: enabled
Cgroup cpu account: enabled
Cgroup memory controller: missing
Cgroup cpuset: enabled
--- Misc ---
Veth pair device: enabled
Macvlan: enabled
Vlan: enabled
File capabilities: enabled
Note : Before booting a new kernel, you can check its configuration
usage : CONFIG=/path/to/config /usr/bin/lxc-checkconfig
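In the output above, two entries are reported as missing. To pull out just those lines from a longer report, the output can be filtered. The sketch below assumes the "Name: status" layout shown above; missing_lxc_features is a name chosen for this example.

```shell
# Print the names of features that lxc-checkconfig reports as missing.
# Reads lxc-checkconfig output on stdin.
missing_lxc_features() {
    awk -F': ' '/missing/ { print $1 }'
}
```

Use it as lxc-checkconfig | missing_lxc_features.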

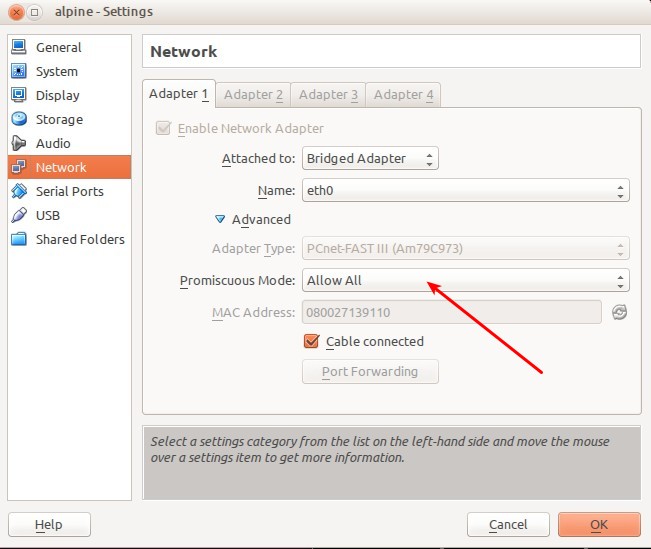

VirtualBox

In order for networking to work in containers, you need to set "Promiscuous Mode" to "Allow All" in the VirtualBox settings for the network adapter.

LXC 1.0 Additional information

Some info regarding new features in LXC 1.0

https://www.stgraber.org/2013/12/20/lxc-1-0-blog-post-series/